The ELK stack is a good option for aggregating and visualizing distributed logging data. It is basically based on Elasticsearch, Logstash and Kibana.

The core of most ELK applications is the Logstash configuration. Here a user defines which data is processed (inputs), how it is processed (filters) and where it goes afterwards (outputs). This configuration in particular contains a lot of logic which is unfortunately not easy to test. In this article I want to show you how to set up a testing environment for your Logstash configuration.

To keep it simple we’ll just focus on the setup. Therefore we create neither complex tests nor an overly large scenario. Our scenario is basically to ship some Apache log events to an Elasticsearch instance and verify the result with Citrus.

We’ll use sample data from monitorwave.com

# Just read from log files which are supposed to be generated by an Apache web server

input {

file {

path => ["/data/*.log"]

start_position => "beginning"

}

}

# Use the default grok pattern to parse the Apache log events

filter {

grok {

match => {

"message" => '%{COMBINEDAPACHELOG}'

}

}

}

# Push it to Elasticsearch and to stdout (stdout could be omitted but is helpful for debugging)

output {

stdout { codec => rubydebug }

elasticsearch {

hosts => ["elasticsearch:9200"]

index => "my-index"

}

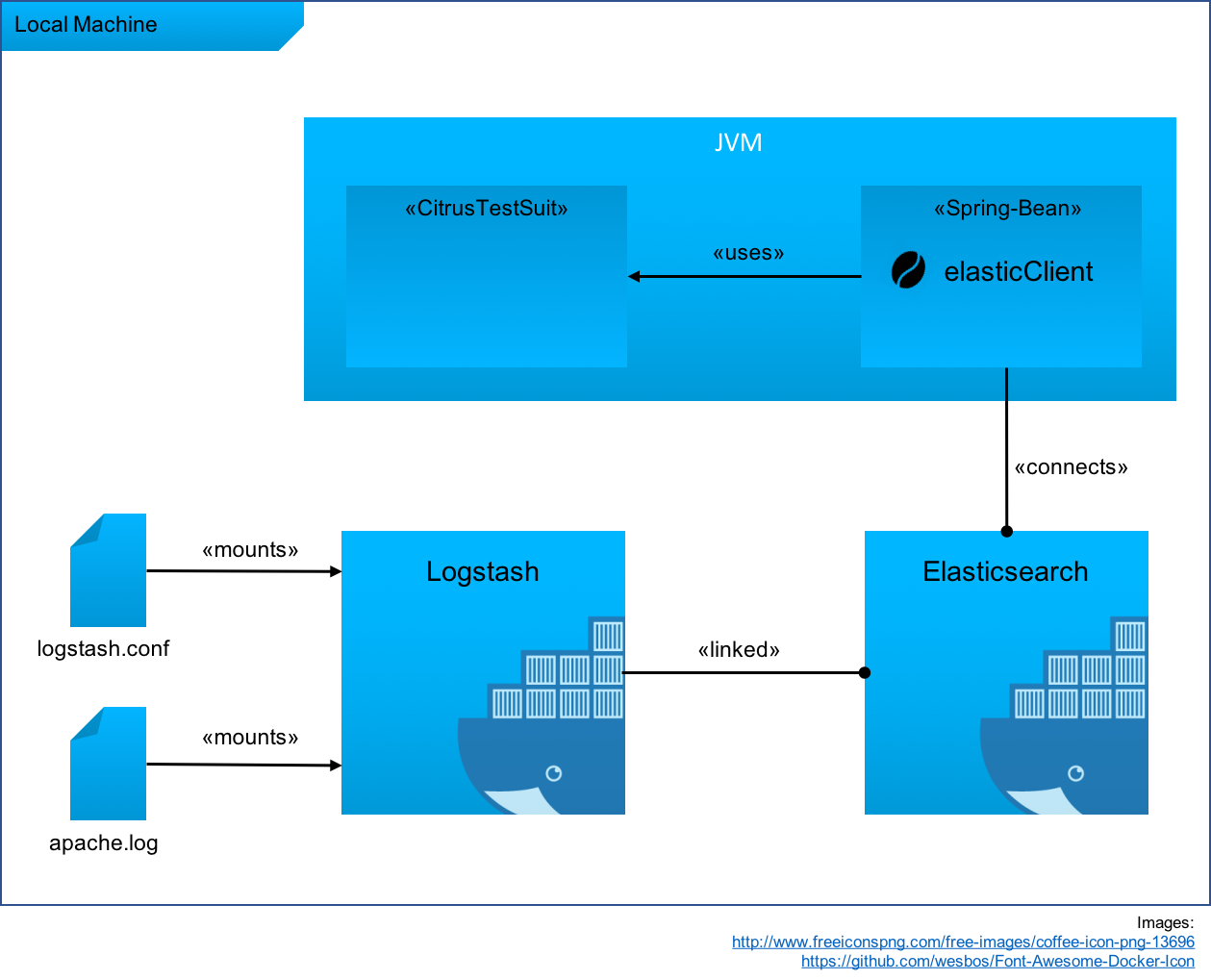

}The basic idea of the setup is outlined in the diagram below:

Step 3.2 in particular is the one to customize according to your needs.
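To make the filter step of the Logstash configuration a bit more tangible, here is a rough Java sketch of what a combined-log pattern pulls out of a single Apache log line. This is not how Logstash implements grok: the regular expression is only a simplified stand-in for %{COMBINEDAPACHELOG}, the class name CombinedLogSketch is hypothetical, the sample line is the well-known example from the Apache documentation (not taken from the test data), and the field names are my assumption of what the classic grok pattern produces.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, only for illustration; Logstash's grok filter does the real work.
final class CombinedLogSketch {

    // Field names assumed to match the classic COMBINEDAPACHELOG grok pattern.
    private static final String[] FIELDS = {
        "clientip", "ident", "auth", "timestamp", "verb", "request",
        "httpversion", "response", "bytes", "referrer", "agent"
    };

    // Simplified stand-in for %{COMBINEDAPACHELOG}; the real pattern is more tolerant.
    private static final Pattern COMBINED = Pattern.compile(
        "^(?<clientip>\\S+) (?<ident>\\S+) (?<auth>\\S+) \\[(?<timestamp>[^\\]]+)\\] "
        + "\"(?<verb>\\S+) (?<request>\\S+)(?: HTTP/(?<httpversion>\\S+))?\" "
        + "(?<response>\\d{3}) (?<bytes>\\d+|-) "
        + "\"(?<referrer>[^\"]*)\" \"(?<agent>[^\"]*)\"$");

    // Returns the extracted fields, or an empty map if the line does not match.
    static Map<String, String> parse(String line) {
        Map<String, String> fields = new LinkedHashMap<>();
        Matcher matcher = COMBINED.matcher(line);
        if (!matcher.matches()) {
            return fields;
        }
        for (String name : FIELDS) {
            String value = matcher.group(name);
            if (value != null) {
                fields.put(name, value);
            }
        }
        return fields;
    }

    public static void main(String[] args) {
        String line = "127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "
            + "\"GET /apache_pb.gif HTTP/1.0\" 200 2326 "
            + "\"http://www.example.com/start.html\" \"Mozilla/4.08 [en] (Win98; I ;Nav)\"";
        // Print one "name = value" line per extracted field.
        parse(line).forEach((name, value) -> System.out.println(name + " = " + value));
    }
}
```

Fields like these are what ends up in each Elasticsearch document, so they are also the natural targets for later assertions in the tests.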

I use the docker-maven-plugin from fabric8io because I gained my first experience with it. There is also an alternative from Spotify, but that plugin focuses on building and deploying images rather than running them, e.g. for tests.

If you are familiar with Maven, this shouldn’t be a big surprise.

<!-- project/build/plugins -->

<plugin>

<groupId>io.fabric8</groupId>

<artifactId>docker-maven-plugin</artifactId>

<version>0.20.0</version>

<configuration>

<!-- Image configuration goes here -->

</configuration>

</plugin>

<!-- ... -->

<!-- project/build/plugins/plugin/configuration/images -->

<image>

<alias>elasticsearch</alias>

<name>elasticsearch:alpine</name>

<!-- Configure the run command, comparable to `docker run ...` -->

<run>

<ports>

<!-- elk.elasticsearch.port is configured in project/properties element -->

<!-- Equivalent to -p 1234:9200 -->

<port>${elk.elasticsearch.port}:9200</port>

</ports>

<env>

<!-- Pass the port as an environment variable to the Docker container -->

<ELASTICSEARCH_PORT>${elk.elasticsearch.port}</ELASTICSEARCH_PORT>

</env>

<volumes>

<!-- Mount points from our project into the Docker container's file system -->

<!-- Equivalent to -v local_path:docker_path -->

<!-- Mounting the Elasticsearch data into Maven's target folder makes it possible to control it with mvn clean (or not if you like) -->

<bind>

<volume>${project.build.directory}/es-data:/usr/share/elasticsearch/data</volume>

</bind>

</volumes>

<!--

This configuration part is very cool:

it defines conditions to check whether a container has started successfully.

In this case it waits for a 200 response from Elasticsearch or a log line which contains `started`, for at most 30000 milliseconds (unfortunately it sometimes takes that long).

-->

<wait>

<http>

<url>http://${docker.host.address}:${elk.elasticsearch.port}</url>

</http>

<log>started</log>

<time>30000</time>

</wait>

<!--

Redirects the container log into a local file. Useful for debugging if the container fails to start.

Might be omitted in production.

-->

<log>

<file>${project.build.directory}/elastic.log</file>

</log>

</run>

</image>

<!-- ... -->

This configuration is pretty much like executing:

docker run --name elasticsearch -p "$ES_PORT":9200 -v "$MVN_BUILD_DIR"/es-data:/usr/share/elasticsearch/data elasticsearch:alpine

<!-- project/build/plugins/plugin/configuration/images -->

<image>

<alias>logstash</alias>

<name>logstash:5</name>

<run>

<!-- Override the docker start cmd and pass the config file as a parameter (-f) to logstash which is started in the container as the entrypoint -->

<cmd>-f /conf-dir/logstash.conf</cmd>

<volumes>

<!-- Mounting the Logstash config source and our test data to the container -->

<bind>

<volume>${project.basedir}/src/main/resources/logstash:/conf-dir</volume>

<volume>${project.basedir}/src/test/resources/logstash:/data</volume>

</bind>

</volumes>

<links>

<!-- Links the Elasticsearch container to this container and makes it available as a host -->

<!-- The Maven plugin recognizes the dependency on that container automatically -->

<link>elasticsearch</link>

</links>

<wait>

<!-- Wait at most 20 seconds for a log line which contains `Successfully started Logstash API endpoint` -->

<time>20000</time>

<log>Successfully started Logstash API endpoint</log>

</wait>

<log>

<!-- Like in the Elasticsearch configuration -->

<file>${project.build.directory}/logstash.log</file>

</log>

</run>

</image>

<!-- ... -->

This configuration is pretty much like executing:

docker run --name logstash --link=elasticsearch -v "$PROJECT_DIR"/src/main/resources/logstash:/conf-dir -v "$PROJECT_DIR"/src/test/resources/logstash:/data logstash:5 -f /conf-dir/logstash.conf

In order to start and stop the containers at the right time we need a little configuration to tell Maven in which phase it should do so.

<!-- project/build/plugins/plugin/executions -->

<executions>

<execution>

<id>start</id>

<!-- Hook into the pre-integration-test phase -->

<!-- Executes the plugin goals `build` and `start`, which build and start the Docker containers (surprise, surprise) -->

<phase>pre-integration-test</phase>

<goals>

<goal>build</goal>

<goal>start</goal>

</goals>

</execution>

<execution>

<id>stop</id>

<!-- Hook into the post-integration-test phase -->

<phase>post-integration-test</phase>

<!-- Executes plugin goal `stop` which stops the containers after the integration tests are executed -->

<goals>

<goal>stop</goal>

</goals>

</execution>

</executions>

<!-- ... -->

To get started with Citrus (and the Citrus Java DSL), just add these dependencies:

<!-- project -->

<dependencies>

<dependency>

<groupId>com.consol.citrus</groupId>

<artifactId>citrus-core</artifactId>

<version>2.7</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>com.consol.citrus</groupId>

<artifactId>citrus-java-dsl</artifactId>

<version>2.7</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>com.consol.citrus</groupId>

<artifactId>citrus-http</artifactId>

<version>2.7</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.testng</groupId>

<artifactId>testng</artifactId>

<version>6.5.2</version>

</dependency>

</dependencies>

<!-- ... -->

Additionally you will want to add some useful testing plugins:

<!-- project/build/plugins -->

<plugin>

<groupId>com.consol.citrus.mvn</groupId>

<artifactId>citrus-maven-plugin</artifactId>

<version>2.7</version>

<configuration>

<author>Tim Keiner</author>

<targetPackage>org.tnobody.citruselk</targetPackage>

</configuration>

</plugin>

<plugin>

<artifactId>maven-surefire-plugin</artifactId>

<version>2.19.1</version>

<configuration>

<forkMode>once</forkMode>

<failIfNoTests>false</failIfNoTests>

<excludes>

<exclude>**/IT*.java</exclude>

<exclude>**/*IT.java</exclude>

</excludes>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-failsafe-plugin</artifactId>

<version>2.19.1</version>

<executions>

<execution>

<id>integration-tests</id>

<goals>

<goal>integration-test</goal>

<goal>verify</goal>

</goals>

</execution>

</executions>

</plugin>

<!-- ... -->

This is all you need to run Citrus :)

Citrus offers a Java DSL, which makes it pretty simple and intuitive to create Citrus tests.

Just create a test class which extends TestNGCitrusTestDesigner.

// src/test/java/org/tnobody/citruselk/ElkTestIT.java

public class ElkTestIT extends TestNGCitrusTestDesigner {

// ...

}

To communicate with the Elasticsearch instance, we need an injectable HttpClient. It must be configured in the Citrus context:

<?xml version="1.0" encoding="UTF-8"?>

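<beans xmlns="http://www.springframework.org/schema/beans"
xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
xmlns:citrus-http="http://www.citrusframework.org/schema/http/config"
xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd
http://www.citrusframework.org/schema/http/config http://www.citrusframework.org/schema/http/config/citrus-http-config.xsd">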

<citrus-http:client id="elasticClient"

request-url="http://localhost:9200"/>

</beans>

With this configuration it is possible to autowire the configured bean into the test class.

@Autowired

private HttpClient elasticClient;

The first test will just verify that we have a connection to the Elasticsearch instance.

Citrus tests define a series of actions. Actions are abstract communication patterns that describe the expected communication flow between systems. In this test we define a send action followed by a receive action (the pattern remains basically the same for the other tests).

@Test

@CitrusTest(name = "Test Connection")

public void testConnection() {

description("Just a setup test");

http()

.client(elasticClient)

.send()

.get("/")

.accept("application/json")

;

http()

.client(elasticClient)

.receive()

.response(HttpStatus.OK)

.messageType(MessageType.JSON)

;

}

In this test we verify that the index my-index has been created and can be queried via the REST API.

The request method is just a small convenience method to describe the send action.

@Test

@CitrusTest(name = "Test Index creation")

public void testIndexCreation() {

request(elasticClient, "my-index")

.accept("application/json")

;

http()

.client(elasticClient)

.receive()

.response(HttpStatus.OK)

.messageType(MessageType.JSON)

;

}

Finally we’ll test a small piece of business logic / validation ;)

The test will sleep to ensure that Logstash has finished filling the index.

Since the test data contains 1000 events (lines), we expect to have 1000 entries in our index.

From this point on you can consider creating more complex Elasticsearch queries and verifying the results.
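A note on the fixed sleep used in the test below: it is the simplest thing that works, but it is either too long or, on a slow machine, too short. One possible alternative is to poll the document count until it reaches the expected value. The following is only a plain-Java sketch of that idea and not part of the Citrus API. The class PollUntilSketch and its method awaitCount are hypothetical, and the counter in main is a fake that stands in for a real call to /my-index/_count.

```java
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.LongSupplier;

// Hypothetical helper, only for illustration; not part of Citrus or Elasticsearch.
final class PollUntilSketch {

    // Polls currentCount until it reports at least `expected` or the attempts run out.
    static boolean awaitCount(LongSupplier currentCount, long expected,
                              int maxAttempts, long pauseMillis) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (currentCount.getAsLong() >= expected) {
                return true;
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(pauseMillis);
                } catch (InterruptedException e) {
                    // Restore the interrupt flag and give up.
                    Thread.currentThread().interrupt();
                    return false;
                }
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Fake counter standing in for the index count: grows by 400 on every poll.
        AtomicLong indexed = new AtomicLong();
        boolean complete = awaitCount(() -> indexed.addAndGet(400), 1000, 10, 50);
        System.out.println("index complete: " + complete);
    }
}
```

The actual assertion on the exact count would still stay in the Citrus test; the polling only replaces the waiting.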

@Test

@CitrusTest(name = "Test Apache Logs")

public void testApacheLog() {

sleep(10000);

request(elasticClient, "/my-index/_count");

http()

.client(elasticClient)

.receive()

.response(HttpStatus.OK)

.messageType(MessageType.JSON)

.validate("$.count", "1000")

;

}

Just run it like every other Citrus test:

mvn clean install

or

mvn clean integration-test

In this section I’ll just write down some ideas. I haven’t tested or really verified them yet :)

In the example the actual sources under test are Logstash configurations. Unfortunately, in some circumstances the configuration might depend on your test environment (especially inputs and outputs), even with Docker :/

Fortunately Logstash can load its configuration from a file or a directory (-f parameter), so you can create special input and output configurations for the test environment.

Since -f supports globs, you can manage test-related and production-related files by naming conventions. Another or additional possibility is to collect all test-related config files in a folder using the Maven resources plugin and mount this folder into the container.
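To illustrate the naming-convention idea, here is a small Java sketch that shows which files a glob would pick for a test run and for production. Everything in it is an assumption for illustration: the file names and the shared/test/prod prefixes are made up, the class ConfigGlobSketch is hypothetical, and it uses Java's own glob matcher, whose syntax is not necessarily identical to what Logstash accepts for -f (in particular, whether Logstash supports brace alternatives is untested here).

```java
import java.nio.file.FileSystems;
import java.nio.file.PathMatcher;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper, only for illustration; it mimics glob selection with Java's matcher.
final class ConfigGlobSketch {

    // Returns the file names that the given glob would select, in input order.
    static List<String> select(String glob, List<String> fileNames) {
        PathMatcher matcher = FileSystems.getDefault().getPathMatcher("glob:" + glob);
        List<String> selected = new ArrayList<>();
        for (String name : fileNames) {
            if (matcher.matches(Paths.get(name))) {
                selected.add(name);
            }
        }
        return selected;
    }

    public static void main(String[] args) {
        // Made-up file names following a prefix convention.
        List<String> files = Arrays.asList(
            "shared-filter.conf", "test-input.conf", "test-output.conf",
            "prod-input.conf", "prod-output.conf");
        System.out.println("test run:   " + select("{shared,test}-*.conf", files));
        System.out.println("production: " + select("{shared,prod}-*.conf", files));
    }
}
```

The point of the convention is that the filter logic lives in one shared file that both environments load, while only inputs and outputs differ.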

In smaller setups the use of environment variables might also be helpful.

An easy way of deploying your configuration to a production server would be the use of a continuous integration server which copies (scp) the file to the target server after a successful build (or another trigger).

If Logstash is running with the flag -r or --config.reload.automatic, it detects the new config automatically. But: keep data migration issues in mind!

If the Logstash config inputs do not depend on a local file system (e.g. they just listen to Filebeat inputs), you could create a custom Docker image running Logstash with your configuration and redeploy this container every time the tests succeed.

Right now we test static log data and start real instances of Logstash and Elasticsearch. You might want to test dynamic / custom logs of your (dockerized) application. To achieve this you should configure a runnable container of your application in Maven. Keep in mind to make the log output available to the Logstash configuration. In Citrus you would configure the endpoints for your application and send some requests to it. The application will produce some log output which is processed by Logstash. Then you can verify that the correct data is loaded into Elasticsearch.

Another alternative is to omit the Elasticsearch container. Citrus is capable of mocking the endpoint. In your tests you can define which PUT requests from Logstash the mocked Elasticsearch endpoint should expect.

As you might have noticed, the source is published on GitHub.